Optimizing MySQL and PostgreSQL: A Real-World Developer's Guide

Published On: Jun 29 2025

Written By: Krishnan Sethuraman

Category: Server Mechanic

Slow MySQL queries are silent killers. They don't just hurt application speed — they directly affect user experience, SEO rankings, server costs, and in extreme cases, your business's reputation. Yet, many developers don’t give databases the attention they deserve until performance issues explode.

In this article, we’ll dive deep into MySQL optimization strategies — from schema design to indexing, query tuning, configuration, and scaling. Whether you're running a WordPress site or a complex SaaS backend, this guide will help you optimize effectively.

This article is result of my experiences with Mysql databases but they work very well with PostgresSQL databases as well. I have applied the same strategies in Postgres for UK Rail cards when I was managing their infrastructure.

Understand the Root Causes of Slowness

Before beginning to work on optimizing the database it is important to find out what's causing the database to slow down.

I would normally create a read replica of the database and perform all my analysis on it. This prevents human errors on the production database.

There are various reasons for the mysql server to slow down. Some of the common culprits are:

- Poorly written queries

- Missing indexes

- Suboptimal schema design

- High concurrency load

- Misconfigured MySQL settings

- Insufficient hardware or I/O bottlenecks

Schema Design Tips for MySQL

Designing the schema ie. the structure of the database is an important part of launching an application that does not fall apart in production.

It is understandable that no one can plan all the columns in a table beforehand. But a reasonable foresight on how the product will evolve and designing the database accordingly will help us keep the tech burden to the minimum in the future.

Points to note

- Use proper data types

- Use

INTinstead ofBIGINTunless you really need 64-bit values. - Use

VARCHARinstead ofTEXTfor searchability and indexing. - Avoid

ENUMif you'll need to change values often.

- Use

- Avoid NULLs in indexed columns

- They reduce index effectiveness in InnoDB.

Indexing for Speed

In my experience most of the time lack of right indexes in the tables are one of the main culprits behind a slow database and poor performing application.

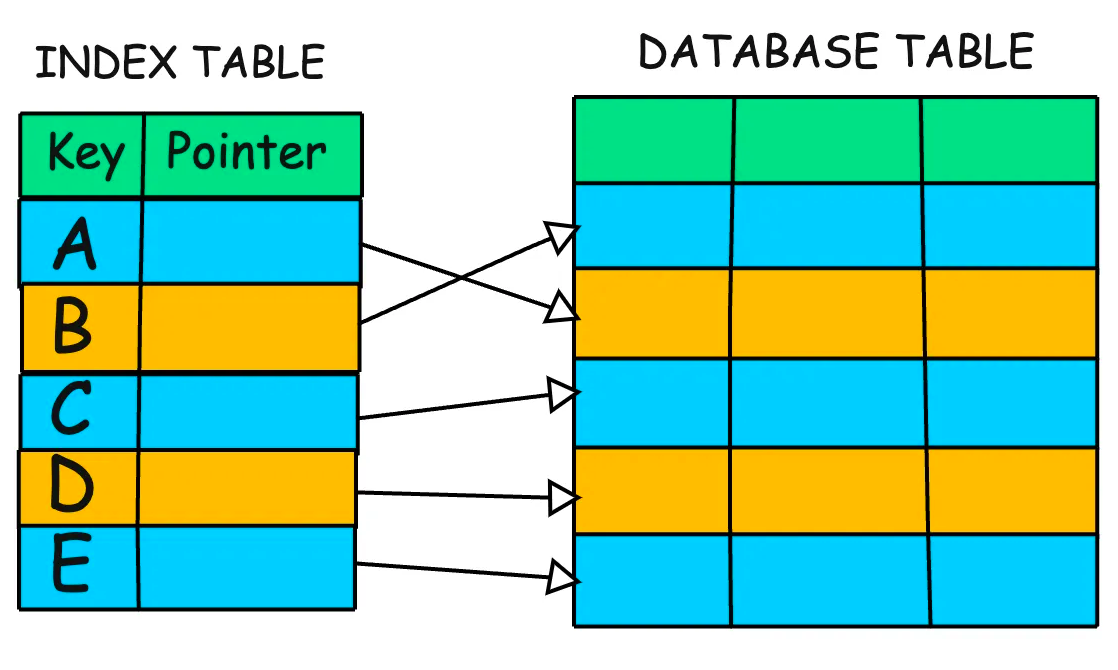

To understand indexes in a table, let's imagine reading a book. If I have to read a particular topic then I will first check for the page number of the said topic in the table of contents and then go to that page and read.

Now imagine not having to reach the same topic without having to look at the table of contents. It might take forever as we will have to flip through the pages and then eventually reach the desired page.

In the same way when a query is executed on a non-indexed table the system scans the whole table before retrieving and displaying the result. This is a slow process and hence slows the query down.

In the event of the table with hundreds and thousands of rows this can even crash the whole database server.

What do we do?

To create indexes we need to first identify the non indexed queries. This can be done by enabling non-indexed query logging in MySQL and letting it be for a week.

After a week you should have a list of queries that have not been indexed.

Now we apply indexes first in the staging environment and then after thorough testing we move to the production environm

ent. Please take snapshots or backups of the database before applying these changes.

Creating Indexes

An index in MySQL is a data structure that improves the speed of data retrieval operations on a database table at the cost of additional space and slower write operations.

Why Use Indexes?

Indexes help:

- Speed up

SELECTqueries - Support

WHERE,JOIN,ORDER BY,GROUP BY - Improve performance for large datasets

Example

CREATE INDEX idx_department ON employees(department);

Creating Composite Indexes

A composite index (also known as a multi-column index) is an index created on two or more columns of a table. It's used to speed up queries that filter or sort on multiple columns together.

Why Use Composite Indexes?

They are useful when:

- You often query by multiple columns together.

- You have

WHEREclauses orORDER BYusing several columns.

Example

CREATE INDEX idx_region_product ON sales(region, product);

Rules of Thumb

- Always index columns used in

WHERE,JOIN,ORDER BY, andGROUP BY. - Use composite indexes wisely — the order matters.

- Use covering indexes to avoid reading table rows entirely.

- Avoid indexing low-cardinality columns (e.g., is_active).

Optimise slow queries

The next step in optimising a database is to look into the queries and optimise them.

Developers often use the ORM that comes along with the database to write queries. There is nothing wrong in but most of these queries are written as,

Posts::where(‘id’, $id)->first();This query translates to,

select * from posts where id = $id;In that above example though the column used in where is indexed this is still a poorly written bad query as we are fetching all the columns from the posts table.

This is not required. A thumb rule in the database is to never write a query * . You need to specify the columns that you need and only fetch them.

So the above query needs to be rewritten as,

Posts::select(‘post’)->where(‘id’, $id)->first();This translates to

select post from posts from id = $id;Now we are fetching only the post column. This query is now optimised and should perform faster than the old query.

If possible, totally ditching the ORM and writing raw Sql queries is also not a bad idea, but I normally leave this to the developer.

Dealing with large tables

While dealing with tables with large datasets, the best practice is to heavily rely on pagination.

Let's imagine that the posts table has over 10,000 posts with published status as ‘yes’.

To fetch the posts never write a query like this,

select post from posts where published = ‘yes’;Instead this should be optimised as,

select post from posts where published = ‘yes’ order by id desc limit 20;In the first query we are fetching all the 10,000 rows. This is a considerable amount of load on the database. But in the second query we have optimised it to only fetch the most recent 20 posts. This speeds up the query and also reduces the amount of load on the database.

TLDR

- Never use queries as

SELECT * FROM - Use

EXISTSinstead ofIN. It is faster in most cases.

Cache Aggressively

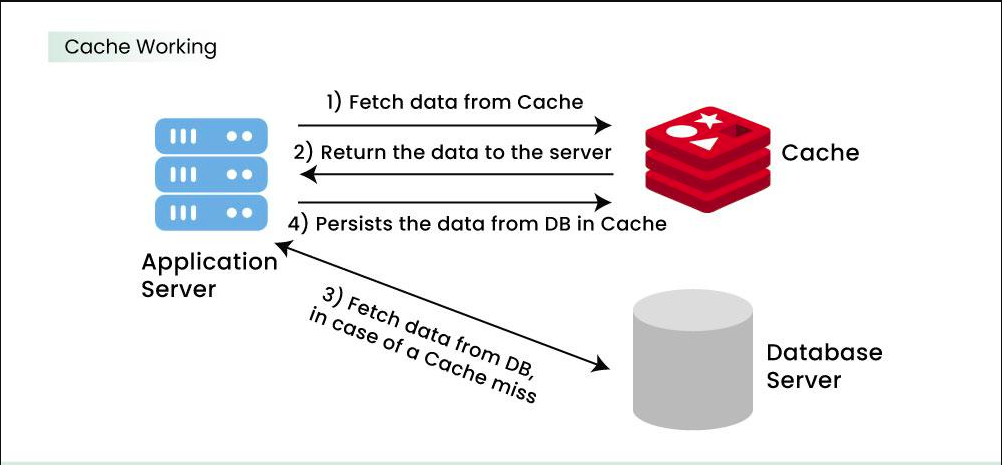

Every time I am asked to do database optimization I always include caching in it. There is no point in hitting the database to run queries if the data remains unmodified.

My preferred caching application is Memcached. It’s quick to set up and easy to use. It also supports clustering. Unlike Redis, Memcached does not support data persistence which means if the Memcached server is restarted, you lose all your data in the caching layer.

This is not a deal breaker for most applications as the source of data is not the caching layer.

It is a good idea to cache expensive SELECT queries and also data that does not change often. For example information like gender and username do not change so it is a good idea to cache them and retrieve the data from the cache.

An effective caching strategy will drastically reduce the load on the database and make it more efficient and also reduce costs in terms of server infrastructure.

Monitoring and Observability

If you are managing your infrastructure then it is also your duty to monitor your infrastructure. Monitoring does not mean staring at a screen every minute. You can set up the right tools and automate the process of sending alerts in the form of sms, email or slack messages.

I love Percona Monitoring and Management. It is built specifically for Mysql and comes from the house of Mysql experts, Percona.

It gives deep insights like slow queries, query analytics, replication, buffer pool, connections and few more.

If you are running RDS then you can also set up Cloudwatch alarms to receive important alerts via email or sms.

Conclusion

MySQL optimization is a journey, not a one-time task. The key is to monitor continuously, write intentional queries, design smart schemas, and cache what you can. Always validate changes with data, not assumptions.

Related Articles

- How to Boost Your Laravel Application’s Performance

- Database Indexes: A detailed guide (external)

- Caching - System Design Concept (external)

Krishnan Sethuraman

Founder & CTO of Geedesk. Passionate about building software from scratch, launching SaaS products, and helping teams deliver enterprise-grade solutions.

Like what you are reading?

Discover more similar articles sent to your email

Subscribe to my newsletter